Ashunara - UE5 Audio Programming

This year, I explored alternative career paths and decided to use my knowledge of Gameplay Development to kickstart my Audio Programming journey. While teaching myself from resources such as articles and videos, I documented my work on YouTube so that I could give back to the community and build my portfolio.

FINAL PROJECT SHOWCASE

OVERVIEW:

As part of my final University project, I had some fun bringing together several of the techniques and features covered on my YouTube channel, while also exploring new technologies such as the Soundscape subsystem, Gameplay Tags, and DataTables. Data gathered from the environment around the player was passed into the spatial hash, allowing the colour-point system to trigger sounds conditionally. Because the sounds are derived from the environment at runtime, this approach is also compatible with procedural worlds (see the new PCG tools in 5.2).

In essence, ~90% of the ambient sounds in the project required no manual placement; all of them were spatially generated at runtime according to the surrounding environment, e.g., birds in dense clusters of trees, frogs in swamp-like areas, bees among dense flowers, crickets in dense grass, etc. This took a lot of weight off my shoulders, broke up the monotony you would experience with traditional methods, and ultimately increased immersion.
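To make the colour-point idea more concrete, here is a minimal, engine-agnostic C++ sketch of a spatial hash that answers density queries such as "how many tree points are near the player?". It does not use the Soundscape subsystem's actual API; `ColourPoint`, `SpatialHash`, `shouldSpawnBirds`, the cell size, the radius, and the threshold are all hypothetical names and values chosen for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for a Soundscape colour point: a world location
// tagged with the kind of environment it represents (e.g. "Tree", "Flower").
struct ColourPoint {
    float x, y, z;
    std::string tag;
};

// Minimal spatial hash: points are bucketed into square cells on the XY plane,
// so a density query only has to look at nearby cells.
class SpatialHash {
public:
    explicit SpatialHash(float cellSize) : cellSize_(cellSize) { assert(cellSize > 0.0f); }

    void insert(const ColourPoint& p) { cells_[keyFor(cellOf(p.x), cellOf(p.y))].push_back(p); }

    // Counts points with a matching tag within `radius` of (x, y, z).
    int countNear(const std::string& tag, float x, float y, float z, float radius) const {
        int count = 0;
        for (int cx = cellOf(x - radius); cx <= cellOf(x + radius); ++cx) {
            for (int cy = cellOf(y - radius); cy <= cellOf(y + radius); ++cy) {
                const auto it = cells_.find(keyFor(cx, cy));
                if (it == cells_.end()) continue;
                for (const ColourPoint& p : it->second) {
                    const float dx = p.x - x, dy = p.y - y, dz = p.z - z;
                    if (p.tag == tag && dx * dx + dy * dy + dz * dz <= radius * radius) ++count;
                }
            }
        }
        return count;
    }

private:
    int cellOf(float v) const { return static_cast<int>(std::floor(v / cellSize_)); }

    // Packs two signed cell indices into one 64-bit key.
    static std::uint64_t keyFor(int cx, int cy) {
        return (static_cast<std::uint64_t>(static_cast<std::uint32_t>(cx)) << 32) |
               static_cast<std::uint32_t>(cy);
    }

    float cellSize_;
    std::unordered_map<std::uint64_t, std::vector<ColourPoint>> cells_;
};

// Example conditional rule: only spawn bird ambience where trees are dense.
// The radius (Unreal units) and the threshold are hypothetical tuning values.
bool shouldSpawnBirds(const SpatialHash& hash, float x, float y, float z) {
    return hash.countNear("Tree", x, y, z, 1500.0f) >= 5;
}
```

A density rule like `shouldSpawnBirds` is the kind of condition that could gate a palette entry; the hash keeps each query limited to nearby cells instead of scanning every point in the level.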

CHALLENGES:

I would say that the most challenging aspect of this project was getting the Soundscape subsystem to work alongside foliage. Because Unreal batches static meshes for hardware instancing (I chose Static Mesh Foliage over Actor Foliage for performance, read more about it here), I had to find a way to retrieve information from the individual instances. Fortunately, I was able to use the hit item as the instance index when casting to FoliageInstancedStaticMeshComponent, and then add each Instance Transform Location to a vector array. As with many things, there are several ways to skin a cat, so Get Instances Overlapping Sphere would also have sufficed. I was fortunate to have worked with HISMs beforehand in this replication proof of concept, which shows that constantly challenging oneself for the sake of knowledge can pay off.

FEATURE HIGHLIGHT BREAKDOWN [1] :

• [00:31] Dynamic Footstep Volume - Footstep volume was mapped to player ground velocity. This enables controlled scaling at any player speed (good for sneaking, sprinting, and accelerating/decelerating).

• [00:31] Laboured Breathing - Subtle, spatialized laboured breathing. The volume for this feature was also mapped to player ground velocity; however, it was important for this sound to stay relatively quiet, as breathing can quickly become obnoxious in video games when done incorrectly.

• [00:39] Independent Footsteps - Independent traces were triggered from an animation notify track. Each foot stored its own bone on contact, allowing an accurate auditory representation of the surface material under that specific foot, e.g., left foot on grass, right foot on stone. When the player landed from a jump, both bones were stored.

• [00:43] Velocity & Friction-based Skidding - After adjusting the friction control settings to allow skidding, UE5.1 Enhanced Input Actions & Mapping Contexts were used to detect an abrupt input release. I then checked whether the player was in the air, and if not, played a skidding sound with its volume once again mapped to ground velocity (for dynamic purposes).

• [00:52] Depth-based Footsteps - Three different footstep Metasounds were made to accommodate different depth ranges: Shoreline, Medium, and Deep. In deep water, footsteps are generally occluded, so what you hear is closer to a sloshing noise than a splashing noise. This was done by setting the water surface to block a trace channel, measuring the distance of the trace, and mapping that distance to three ranges for the respective depths. This check was only carried out while the player was overlapping the water; for performance reasons, we don't want to run it when travelling on dry land.

• [01:03] Locally Saved Class Control - With a SaveGame object, numerous variables could be locally saved, such as volume across a range of classes, and even things such as sensitivity. When the game is initialized, we simply check if a save exists in a slot; if no save exists then we create one, and if one exists then we retrieve it.

• [01:27] Miscellaneous Environment Sounds - These constitute the 10% of sounds not handled by the Soundscape subsystem (things like fires, rivers flowing, and even a stationary crow). It was important to manually place these for spatial accuracy.
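Several of the features above boil down to mapping a gameplay value onto an audio parameter. The sketch below is a plain C++ approximation of a "map range clamped" node applied to ground speed, plus a three-way depth classification; all ranges and thresholds are hypothetical, and the function names are illustrative rather than Unreal API.

```cpp
#include <algorithm>
#include <cassert>

// Engine-agnostic equivalent of a "map range clamped" node: remaps `value`
// from [inMin, inMax] to [outMin, outMax], clamping outside the input range.
float mapRangeClamped(float value, float inMin, float inMax, float outMin, float outMax) {
    assert(inMax != inMin);
    const float t = std::clamp((value - inMin) / (inMax - inMin), 0.0f, 1.0f);
    return outMin + t * (outMax - outMin);
}

// Footstep (or skid) volume from ground speed in cm/s. Ranges are hypothetical.
float footstepVolume(float groundSpeed) {
    return mapRangeClamped(groundSpeed, 0.0f, 600.0f, 0.2f, 1.0f);
}

// Breathing uses the same input but a much lower ceiling so it stays subtle.
float breathingVolume(float groundSpeed) {
    return mapRangeClamped(groundSpeed, 0.0f, 600.0f, 0.0f, 0.25f);
}

enum class WaterDepth { Shoreline, Medium, Deep };

// Buckets a measured water depth (cm) into the three footstep variants.
// Thresholds are hypothetical; the caller should only run this while the
// player overlaps water.
WaterDepth classifyDepth(float depthCm) {
    if (depthCm < 30.0f) return WaterDepth::Shoreline;
    if (depthCm < 90.0f) return WaterDepth::Medium;
    return WaterDepth::Deep;
}
```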

FEATURE HIGHLIGHT BREAKDOWN [2] :

• [00:00] Main Menu Button Interaction - Having a subtle "click" sound when hovering over buttons is fairly commonplace among games nowadays, so I wanted to push this a bit further. When hovering over the PLAY button, the sound of a stream gradually increases; when hovering over the EXIT button, the sound of a crackling fire gradually increases (I found fire appropriate for the exit functionality as it has connotations of danger and destruction). Both gradually decrease upon un-hover.

• [00:13] Sound Ducking - For sequences such as speaking, it can sometimes be appropriate to lower background sounds so that the player can clearly hear what is being said. This process is known as ducking, and in my case, I ducked the music and the environment. This was done using a passive mix, meaning that anytime the game detected speaking, it would automatically duck other sounds. Note that ducking can be done manually by pushing and popping mixes.

• [00:22] Underwater Effects - On the topic of pushing mixes, when the player camera enters the water, we can push a mix that applies a low-pass filter and trigger an underwater loop. This is a relatively simple process that adds an extra layer to the audio in our environment and can be taken even further for more realistic results.

• [00:34] Water Entry / Exit - While we are talking about water, entry and exit sounds were achieved using the player Z velocity. This allows large and small splashes depending on how fast the player collides with the water.

• [00:48] Gameplay-driven Events - Audio typically sits in the background of most games, subconsciously absorbed by the player. I feel that this is part of the reason why the field of Audio Programming is so niche and underappreciated. To address this, I added an easter egg that triggers a conversation between the player and the spirit of the shrine that ties in with the lore of the hallowed grounds.

• [00:59] Soundscape Additions - A couple of sounds were added to the cave palette to bring life to the environment. This was enhanced by an audio volume containing a reverb preset, which produced an echo for all sounds in the area. These additions included a bat flapping its wings and debris falling on the ground, both of which made use of colour height settings (bats would spawn above the player, while debris would trace to the ground to simulate debris hitting the floor).

• [01:18] Miscellaneous Environment Sounds - The third environment offered some opportunities to incorporate appropriate environmental sounds, as well as to use Soundscape in a rather distant manner. Besides the wooden structures creaking and the flags blowing in the wind, 'corpses of the past' produced spatialized battle noises to represent the violent proceedings. An explosive barrel was also added to instil a sense of danger in the player, and to experiment with an audio effect that many people report after being exposed to loud noises. Lastly, if you listen carefully to the top showcase video [01:13], you will notice spatialized ambient explosions that the subsystem intermittently spawns a couple of thousand units away.
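The hover fades and the passive ducking above both amount to moving a gain toward a target over time. A minimal C++ sketch, assuming a constant-rate fade and a counter of active dialogue voices (both hypothetical simplifications; in Unreal, a passive Sound Class Mix handles this internally):

```cpp
#include <algorithm>
#include <cassert>

// Moves `current` toward `target` by at most `ratePerSecond * dt` per update,
// i.e. a constant-rate (linear) fade like the hover stream/fire loops.
float stepToward(float current, float target, float ratePerSecond, float dt) {
    assert(ratePerSecond >= 0.0f && dt >= 0.0f);
    const float maxStep = ratePerSecond * dt;
    if (current < target) return std::min(current + maxStep, target);
    return std::max(current - maxStep, target);
}

// Hypothetical passive-ducking model: while any dialogue voice is playing,
// the music/ambience gain fades down to `duckedGain`; afterwards it fades back up.
class Ducker {
public:
    Ducker(float duckedGain, float fadeOutRate, float fadeInRate)
        : duckedGain_(duckedGain), fadeOutRate_(fadeOutRate), fadeInRate_(fadeInRate) {}

    void onDialogueStarted() { ++activeDialogue_; }
    void onDialogueStopped() { if (activeDialogue_ > 0) --activeDialogue_; }

    // Call once per frame; returns the gain to apply to the ducked classes.
    float update(float dt) {
        const bool ducking = activeDialogue_ > 0;
        const float target = ducking ? duckedGain_ : 1.0f;
        gain_ = stepToward(gain_, target, ducking ? fadeOutRate_ : fadeInRate_, dt);
        return gain_;
    }

private:
    float duckedGain_, fadeOutRate_, fadeInRate_;
    float gain_ = 1.0f;
    int activeDialogue_ = 0;
};
```

Counting active dialogue voices (rather than using a single flag) keeps overlapping lines from un-ducking the mix early.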

ASHUNARA PROJECT PLAYTHROUGH & REFLECTION:

I have included a complete playthrough of the project below for those interested. Given more time, I would have liked to include dynamic ambience that interpolates between Soundscape palettes depending on the time of day, e.g., bird sounds replaced with owls, other animals muted to convey that they are asleep, background cricket ambience slightly louder, etc. Furthermore, I would have liked to create a procedural bird Metasound, as I felt that some of the calls were bordering on repetitive.

Please note: This project is not intended to be a game; gameplay only exists to entertain the player at a base level and justify environment exploration (so that they can experience the work I have put into populating the scene with sounds). The purpose of all work presented in this submission is to demonstrate Audio Programming practices that can be applied to games.

CREDITS:

🔊 Main Menu MUSIC - Arctic Station by Crypt-of-Insomnia

🔊 Cavern MUSIC - Into The Gallows Raw - Dark Violin Chant Cemetary Copyright Royalty Free Music and Footage

🔊 Castle Memories MUSIC - DARK FANTASY MUSIC | ROYALTY AND COPYRIGHT FREE

🔊 Corpse Battlefield SFX - D&D Ambience - Full Scale War

🔗 General SFX [1] - Ultimate SFX & Music Bundle - Everything Bundle

🔗 General SFX [2] - Zapsplat

◻️ Forest Environment Assets - Fantasy Forest - Forest Environment

◻️ Cavern Environment Assets - Ancient Cavern Set III

◻️ Castle Memories Environment Assets - Siege of Ponthus Environment

◻️ Animal Assets - ANIMAL VARIETY PACK

◻️ Water Assets - Cartoon Water Shader

◻️ Player Character - Gladiator Warrior

◻️ Miscellaneous Assets - Infinity Blade: Props

◻️ Ruins Assets - Ancient Ruins

🔗 Icons - Game-Icons

🔗 Fonts - Diverda Sans | Raylish

AUDIO PROGRAMMING R&D

OVERVIEW:

Before undertaking the project above, I needed a foundation of Audio Programming knowledge. I chose tasks based on resources I could find online and interpreted them in my own way. Due to the nature of audio work, images alone are not enough for documentation, so I settled on YouTube as my platform of choice.

EXPERIMENTATION SHOWCASE:

• [00:00] Adding Audio to Footsteps - Footsteps are perhaps one of the most basic yet important pieces of audio, and I would argue that every game should have them. The sound our feet make when they come into contact with the floor is among the most common sounds the human body makes when interacting with its immediate environment (alongside breathing and talking). One could argue that it is technically the surface, not our body, making the sound, but that does not change the sheer scale at which we experience the phenomenon. We can observe this even in a game as minimalistic as Minecraft, where the only sounds the player's body produces (excluding tools) occur when traversing (walking, sprinting, swimming, etc.), consuming, and taking damage. Although I had already implemented footsteps in previous projects, I carried out research regardless, to refresh my memory and to observe the practices others were using. There is a myriad of videos on footsteps, so I only watched a few, yet no matter the video, the approach was generally the same.

• [00:23] Adding Reverb with Audio Volumes - To achieve some acoustic accuracy, I built on the footstep work and began researching ways to create reverb. I explored reverb mainly because I wanted varying acoustics in my final project, and the simplest way I could think of to achieve this was to create different spaces. I chose not to create different spaces for my YouTube videos, as set dressing can take up much-needed R&D time, and I would only have been changing a few float values in the attenuation settings between them.

• [00:40] Audio Occlusion with Low-pass Filters & Spatialization - As this video states, the audio occlusion system built into Unreal Engine is "super simple". This is great for those working on smaller projects, but for a professional level of work, I was unhappy with the number of options provided. The problems are as follows:

The detection is binary. By this, I mean that a sound is either occluded or unoccluded at any given time; it cannot be half occluded. While Unreal does offer interpolation (gradually going from 0% occlusion to 100%), the result cannot rest anywhere between those two values.

Because of binary detection, some objects (such as the chest in the video) would trigger full audio occlusion, creating extra work for a developer such as myself, who then had to add exceptions for them.

With these issues in mind, I planned to make a system in the future that handled occlusion in a better way, making use of existing methods and innovating at the same time.

• [00:59] Doppler Effect - From Wikipedia, the Doppler effect "is the apparent change in frequency of a wave in relation to an observer moving relative to the wave source". With this in mind, I was surprised to discover that such a complex auditory interaction had been simplified to a single node in Unreal Engine. I tried this out myself and found a major flaw: rapidly moving the camera around the player distorted the sound. This is likely because the built-in system uses the audio listener's location (in this case, the camera) in its calculation. To fix this, I would calculate the Doppler effect manually using resources found online. Not only had this been done before in Unity, but the exact same problem had also been addressed in this blog, which suggested the undertaking was feasible.

• [01:09] Spline-based audio as a potential solution to more complex shapes - To play audio along a complex shape such as a river, I turned to spline-based audio. I later discovered a major problem with this approach: around sharp bends, the position of the audio would jump from one place to another. One potential solution would be to avoid spline-based audio entirely and simply place sounds at roughly equal distances along the river. This would not only be better for performance but also more spatially consistent.

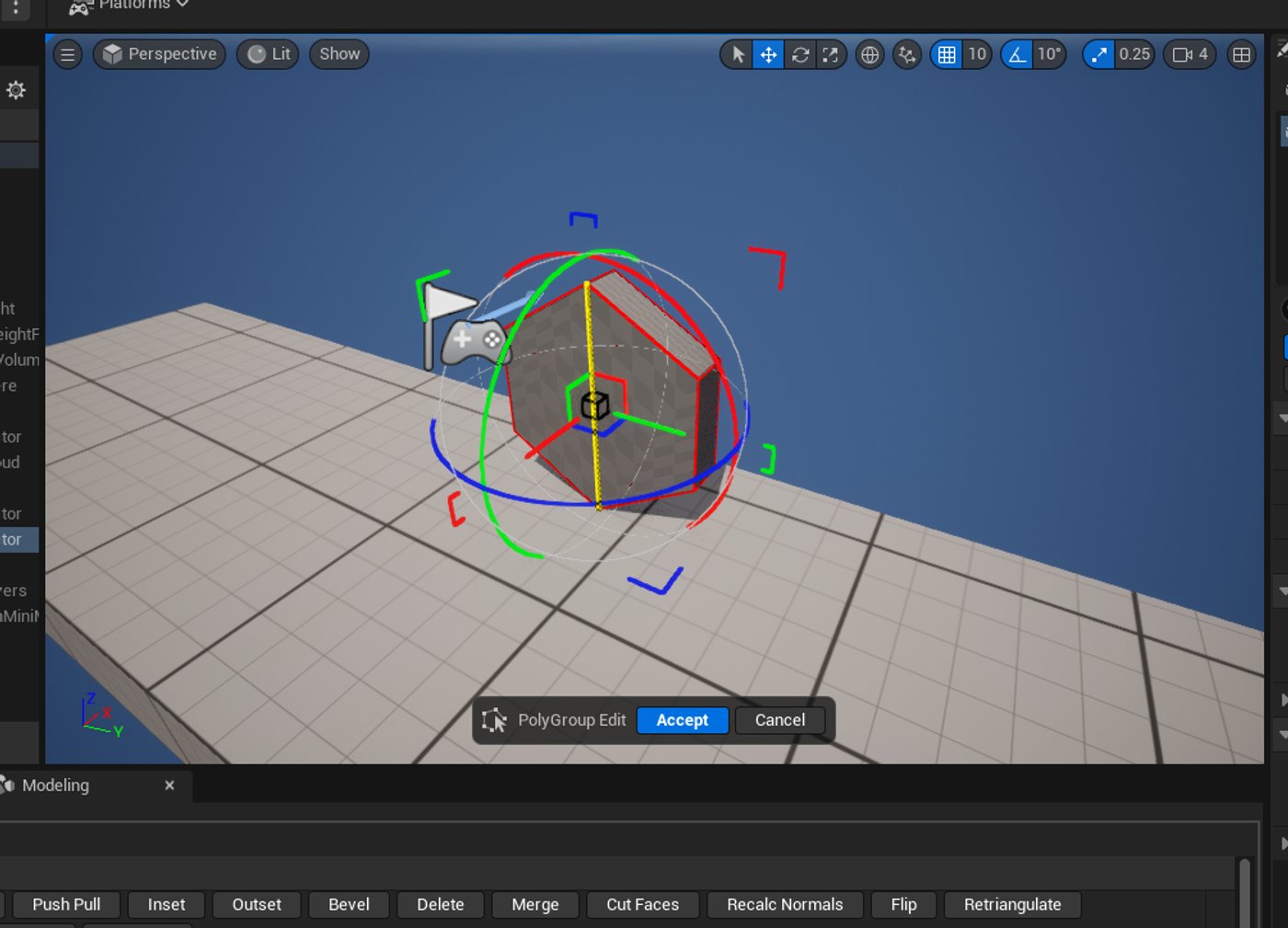

• [01:23] Custom Audio Volume Shapes using UE5 Modelling Tools - A small yet important quality-of-life feature concerning audio volumes was using the Unreal Engine modelling tools to reshape an audio volume to fit a building or other space. I would never have thought that these two tools worked in conjunction with one another, and this is a great example of how there is always something to learn in your primary suite. I found this video that nicely explained the process, and reused the reverb effect from the previous task in this intermediate demonstration. If I were to go back and do something differently, I would likely explore more acoustic effects rather than relying on one I had already made, and try out the relatively new audio gameplay volumes.

• [01:37] Ducking Audio using Sound Mixes & Sound Classes - Following research into mixing audio in Unreal Engine, I attempted a version of this myself that made use of both pushing and popping mixes, as well as using a passive mix. This meant that I could manually push a sound mix (e.g., when an explosion occurs and the player is too close), or automatically trigger it (e.g., when an NPC talks the background music will lower in volume).

• [01:47] Reducing system load with Concurrency settings e.g., culling with max counts - When playing a large number of sounds, there comes a point where the player can no longer hear the difference if some of them are culled, so a limit can be placed on how many play at once. Unreal handles this through concurrency settings, which are great for optimization. Note that some sounds can be prioritized over others, e.g., in a competitive game you may want footsteps to be more important than distant explosions.
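For the manual Doppler calculation mentioned above, one option is the textbook moving-source/moving-observer formula evaluated against the player pawn rather than the camera. This is a sketch under that assumption: `dopplerPitch`, the 34300 cm/s speed of sound, and the 0.5 to 2.0 clamp are hypothetical choices, not the engine's implementation or the approach from the linked blog.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Classic Doppler pitch ratio f'/f = (c + v_l) / (c + v_s), where v_l and v_s
// are the listener's and source's velocity components along the unit vector
// pointing from the listener to the source.
// The "listener" is meant to be the player pawn, not the camera, so fast camera
// orbits around the player do not change the pitch.
float dopplerPitch(Vec3 listenerPos, Vec3 listenerVel, Vec3 sourcePos, Vec3 sourceVel,
                   float speedOfSound = 34300.0f /* cm/s, Unreal units */) {
    const Vec3 toSource = sub(sourcePos, listenerPos);
    const float dist = std::sqrt(dot(toSource, toSource));
    if (dist < 1e-3f) return 1.0f;  // co-located: no meaningful direction
    const Vec3 u = {toSource.x / dist, toSource.y / dist, toSource.z / dist};

    const float vl = dot(listenerVel, u);  // > 0 when the listener approaches the source
    const float vs = dot(sourceVel, u);    // < 0 when the source approaches the listener
    // Keep the denominator positive so very fast sources cannot flip the sign.
    const float denom = std::max(speedOfSound + vs, 0.1f * speedOfSound);
    const float pitch = (speedOfSound + vl) / denom;
    // Hypothetical safety clamp to a sane pitch range.
    return std::clamp(pitch, 0.5f, 2.0f);
}
```

Only the radial components of the velocities matter, so a source moving sideways past the listener produces no shift at the instant it is perpendicular.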

FLAMETHROWER DEMONSTRATION (METASOUNDS):

As part of a Lecture Series proposed by the University, I decided to contribute by creating a resource that shared my Audio Programming knowledge. I chose Metasounds for this, as delivering a lecture that required additional software would have caused unnecessary hassle, especially given the short time span we had. Before delivering the lecture, I put together a YouTube video on the subject so that my work could also contribute to my portfolio. This video was informed by a few pieces of research (as I had not dived too deep into Metasounds beforehand), since I wanted to be in a position to answer questions from anyone interested in the tools I would be demonstrating:

• Getting started with Metasounds - Useful for learning basic implementation & navigation.

• Intro to Metasounds - A bit more in-depth than the above, making use of some parameters, and demonstrating some possibilities.

• Practical Metasounds designs - The squeaking door example from the above video piqued my interest, so I began to research some existing designs to get a better handle on what kind of systems this tool was capable of.

• Weapon sound design - An informative talk on using Metasounds with a sound design-focused approach, useful for taking audio implementation one step further.

• From Miniguns to Music - Another great resource for learning the basics and getting a grasp of the use cases. I used this video in particular as a foundation for my example.

• Endless Music Variations & Gameplay hooks - I had no plans for such a system, however, I was still curious about what was possible when dealing with music.

• Metasounds reference guide - A complete collection of all Metasounds nodes, which I found useful for making more concise and complex systems. It was not until I later watched this video on dynamic ambience that I discovered you could combine the trigger route node with an interpolation node to achieve a fade-in/out effect with values of your choice. This is a great alternative when you want a linear interpolation that does not rely on a timeline.

• Accessing the Output Values - I encountered an issue where I could not trigger events at certain points during a Metasound, and a quick search confirmed that this feature was not supported at the time.

• Will Metasounds replace Wwise or FMOD? - Rationalizing the usage of the tool in comparison to what I was previously learning.

Overall, I believe that the resource I created was well-informed and presented the subject at a rudimentary enough level that a layperson could approach it. If I had more time to work with Metasounds, I would love to explore some procedural and adaptive music using packs such as these.

OVERWATCH-INSPIRED POINT CAPTURE SYSTEM (METASOUNDS):

After playing some Overwatch in my time off, I noticed an audio system that I thought Metasounds would be great at replicating: the point capture system. I began by breaking it up into a few parts:

• A constant humming noise would be used while capturing/neutralizing a point, increasing and decreasing in pitch.

• A beeping sound would signify the presence of an enemy on the point (contesting).

• Two different capture sounds for an ally capture and an enemy capture.

After I had a collection of sounds ready, I put together the system as shown in the video. There were a few reasons behind the decision to make this, such as the usage of the trigger repeat, combining gameplay programming with audio programming, and taking something that already exists and demonstrating that I could recreate it successfully using knowledge gained from research.
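The breakdown above maps naturally onto a small state object. This C++ sketch is hypothetical throughout (the pitch range, beep interval, and stinger names are placeholders); it only illustrates how capture progress could drive the hum's pitch and how a repeating timer, similar in spirit to a Metasound trigger repeat, could emit contest beeps.

```cpp
#include <algorithm>
#include <cassert>
#include <string>

// Hypothetical model of the capture-point audio state: a hum whose pitch
// follows progress, a repeating beep while contested, and separate capture
// stingers for allies and enemies.
struct CapturePointAudio {
    float progress = 0.0f;       // 0..1 capture progress
    bool contested = false;
    float beepInterval = 0.25f;  // seconds between beeps (like a trigger repeat)
    float beepTimer = 0.0f;

    // Hum pitch multiplier: rises from 0.8x to 1.6x as the point fills (hypothetical range).
    float humPitch() const { return 0.8f + 0.8f * std::clamp(progress, 0.0f, 1.0f); }

    // Advances the beep timer; returns how many beeps should fire this frame.
    int updateBeeps(float dt) {
        assert(beepInterval > 0.0f);
        if (!contested) { beepTimer = 0.0f; return 0; }
        beepTimer += dt;
        int beeps = 0;
        while (beepTimer >= beepInterval) { beepTimer -= beepInterval; ++beeps; }
        return beeps;
    }

    // Picks a stinger once the point is taken (names are placeholders).
    static std::string captureStinger(bool capturedByAllies) {
        return capturedByAllies ? "SFX_Capture_Ally" : "SFX_Capture_Enemy";
    }
};
```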

UE5 & WWISE [1] - RTPCs:

After speaking with a couple of industry professionals in the field of audio (both on-site and by reaching out on platforms such as Discord), as well as identifying common requirements across job listings, I decided to begin a series on Wwise, a popular audio middleware.

The video below uses RTPCs (Real-Time Parameter Controls) to drive a low-pass filter on all audio in a bus, creating a muffled underwater effect. During this process, I also found that some audio was peaking due to high gain, a problem I later learned to solve using limiters. Lastly, I decided to add flavour to the environment by demonstrating the selection of appropriate sounds, a much-needed skill for someone in my position with limited facilities and resources to produce my own samples.
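As an illustration of what an RTPC-driven low-pass does, the sketch below maps a 0 to 100 parameter onto a cutoff frequency and applies a one-pole low-pass filter. It is a generic DSP example, not Wwise's filter implementation, and the 20 kHz and 500 Hz endpoints are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Maps a 0..100 "underwater" parameter (like an RTPC) to a cutoff frequency.
// Interpolating in log-frequency keeps the sweep perceptually even.
// The 20 kHz / 500 Hz endpoints are hypothetical tuning values.
float cutoffFromRtpc(float rtpc) {
    const float t = std::clamp(rtpc, 0.0f, 100.0f) / 100.0f;
    const float dryHz = 20000.0f, wetHz = 500.0f;
    return dryHz * std::pow(wetHz / dryHz, t);
}

// One-pole low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1]), a = 1 - exp(-2*pi*fc/fs).
class OnePoleLowPass {
public:
    void setCutoff(float cutoffHz, float sampleRate) {
        assert(sampleRate > 0.0f);
        const float kPi = 3.14159265358979f;
        a_ = 1.0f - std::exp(-2.0f * kPi * cutoffHz / sampleRate);
    }

    // Filters the buffer in place, keeping state between calls.
    void process(std::vector<float>& buffer) {
        for (float& x : buffer) {
            state_ += a_ * (x - state_);
            x = state_;
        }
    }

private:
    float a_ = 1.0f;  // a = 1 passes audio through unchanged
    float state_ = 0.0f;
};
```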

UE5 & WWISE [2] - FOOTSTEPS:

I have already covered the topic of footsteps in general, so I won't go into unnecessary exposition; however, I will mention that I was happy with how easy this aspect was to pick up and integrate. I found that switch groups in Wwise worked similarly to flow control in Unreal, and I was even able to adapt my independent footstep system from before to work with Wwise.

UE5 & WWISE [3] - BASIC REVERB:

The process here is once again similar to the reverb covered before (see this video), but I want to reflect on a potential missed opportunity here.

Unlike Unreal Engine, Wwise provides numerous reverb presets, such as hallways, cathedrals, small rooms, large rooms, etc. Given more time, I would have liked to create some spaces that helped visualize the difference between settings. I have found that simply looking at numbers on a list does not compare to hearing the difference inside the respective environment.

UE5 & WWISE [4] - VOLUME SLIDER CONTROL:

A common complaint in some of the games I have developed both independently and collaboratively is a lack of volume control. Due to a fast development cycle, there is not often enough time to add such a quality-of-life feature. Fortunately, this series gave me the perfect opportunity to put into practice a set of skills that would alleviate some of my previous struggles.

The volume control differed from the other videos in that it relied entirely on the knowledge I had gained up until this point (instead of using secondary research). While I did achieve the desired result, I do also wish that I had gone back and explored some existing methods after attempting my own to identify areas for potential improvement.
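One common way to build such a slider, sketched here as an assumption rather than the method from the video, is to treat the slider as linear in decibels and convert to an amplitude multiplier only when needed. The -60 dB floor and the -96 dB mute value are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Maps a UI slider in [0, 1] to a volume offset in decibels. The slider is
// treated as linear in dB between minDb and 0 dB, with 0 snapping to a
// hypothetical "mute" value.
float sliderToDb(float slider, float minDb = -60.0f) {
    assert(minDb < 0.0f);
    slider = std::clamp(slider, 0.0f, 1.0f);
    if (slider <= 0.0f) return -96.0f;  // hypothetical floor treated as silence
    return minDb * (1.0f - slider);
}

// Converts decibels to a linear amplitude multiplier: gain = 10^(dB / 20).
float dbToLinear(float db) { return std::pow(10.0f, db / 20.0f); }
```

Working in decibels spreads audible change more evenly along the slider than a linear amplitude slider would, since loudness perception is roughly logarithmic. The resulting value could then be saved per class alongside the other settings.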

UE5 & WWISE [5] - CONCURRENCY OPTIMIZATIONS:

It was no surprise that a piece of audio middleware such as Wwise offered greater control over memory optimizations, and as these two sources from Audiokinetic showed [1][2], the user has control not just over playback limits, but also priority and platform-specific instances.
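To show what playback limits with priority mean in practice, here is a minimal, middleware-agnostic C++ sketch of a voice limiter that steals the lowest-priority (and, on ties, oldest) voice. `VoiceLimiter` and its return codes are hypothetical; Wwise and Unreal expose this behaviour through their own settings rather than code like this.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Voice {
    int id;            // assumed non-negative
    int priority;      // higher = more important (e.g. footsteps over distant explosions)
    double startTime;  // seconds
};

// Hypothetical playback-limit group: at most `maxVoices` play at once. When full,
// a new voice replaces the lowest-priority voice (oldest first on ties) only if
// its own priority is at least as high; otherwise the new voice is rejected.
class VoiceLimiter {
public:
    explicit VoiceLimiter(std::size_t maxVoices) : max_(maxVoices) { assert(maxVoices > 0); }

    // Returns the id of the voice stopped to make room, -1 if none, or -2 if rejected.
    int tryPlay(const Voice& v) {
        if (active_.size() < max_) { active_.push_back(v); return -1; }
        auto victim = std::min_element(active_.begin(), active_.end(),
            [](const Voice& a, const Voice& b) {
                if (a.priority != b.priority) return a.priority < b.priority;
                return a.startTime < b.startTime;  // older voice ranks lower
            });
        if (v.priority < victim->priority) return -2;
        const int stolen = victim->id;
        *victim = v;
        return stolen;
    }

    std::size_t activeCount() const { return active_.size(); }

private:
    std::size_t max_;
    std::vector<Voice> active_;
};
```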

UE5 & WWISE [6] - SPLINE/PATH-BASED AUDIO OCCLUSION:

As previously mentioned in my occlusion analysis, I wanted to make an occlusion system in Unreal that made up for the shortcomings of the engine. Numerous content creators have taken on this task, such as Scott Games Sounds' video on occlusion with FMOD and Unity, which also led me to this resource on how Quantum Break handled its occlusion that I would later make use of. More intriguing, however, was a system made by Alex Hynes on spline-based occlusion, inspired by how Overwatch handled its sounds as covered in their GDC talk.

After carrying out this research, I began creating the system. It would differ from Alex Hynes' system in that it did not require any extra level design work (blocking off floors/ceilings), mainly due to the safeguards I had built into the function. I won't explain the system in too much detail here since the result is visible in the video below; however, I will identify the challenges I faced when preparing something as complicated as this.
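Whatever the exact system, a path-based approach needs to turn a path into a continuous occlusion value, which also avoids the binary behaviour criticised earlier. One plausible metric, assumed here and not taken from Alex Hynes' system or from the video, compares the length of the path the sound travels with the straight-line distance. `detourOcclusion` and the 2000 cm saturation distance are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

inline float dist3(Vec3 a, Vec3 b) {
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Total length of a polyline path (e.g. points sampled along a spline from the
// sound source, through doorways, to the listener).
float pathLength(const std::vector<Vec3>& path) {
    float total = 0.0f;
    for (std::size_t i = 1; i < path.size(); ++i) total += dist3(path[i - 1], path[i]);
    return total;
}

// Hypothetical partial-occlusion metric: the further the sound has to detour
// compared with the straight line, the more occluded it is. `maxDetour` is the
// extra distance (cm) at which occlusion saturates at 1.0.
float detourOcclusion(const std::vector<Vec3>& path, float maxDetour = 2000.0f) {
    assert(path.size() >= 2 && maxDetour > 0.0f);
    const float direct = dist3(path.front(), path.back());
    const float detour = pathLength(path) - direct;
    return std::clamp(detour / maxDetour, 0.0f, 1.0f);
}
```

The resulting 0 to 1 value could then drive a low-pass cutoff or volume, giving the in-between states a binary trace cannot.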

I knew that, unlike my other videos, subtitles would not be enough to accompany the nearly 10-minute recording. After writing a script, I recorded myself using Audacity and then imported the recording into Adobe Audition. Inside Audition, I applied a variety of effects using both built-in tools and plugins. These solved some of the problems I experienced, such as background noise, audio peaking, and audio boxiness, and improved quality and consistency through some equalization (EQ). After these steps my audio sample was clearly of much better quality; however, across multiple takes, I found a lack of consistency in the recordings. I identified the source of this problem as the environment I was recording in (my bedroom), which did not provide the greatest acoustics and is a prime example of why studios exist. I attempted a fix by placing a blanket over my head and a pillow behind my microphone to reduce reflections and simulate an acoustic blanket, which made the recording sound thicker but did not entirely solve the consistency issue. I eventually resorted to a free online text-to-speech tool; while it lacked some personality, it provided a clear and extremely consistent tone that YouTube's automatic closed captions could transcribe easily for those hard of hearing.

As mentioned at the end of the video, I concluded that a system based on the thickness of the walls and the material type (as in CS:GO) would likely work better than the spline-based occlusion I had created. Given more time, I would definitely go back and create a system using this approach.
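A thickness-and-material model of the kind described could be sketched as follows. The absorption values, the 40 dB cap, and the idea of measuring thickness from entry and exit points along the source-to-listener line are assumptions for illustration, not CS:GO's actual implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

enum class Material { Wood, Concrete, Metal };

// Hypothetical absorption values in dB per centimetre of material.
float dbPerCm(Material m) {
    switch (m) {
        case Material::Wood:     return 0.5f;
        case Material::Concrete: return 1.5f;
        case Material::Metal:    return 2.0f;
    }
    return 0.0f;
}

// One obstacle crossed by the source-to-listener line. In an engine, the entry
// point could come from a forward trace and the exit point from a reverse trace.
struct WallCrossing {
    float entryDistance;  // cm along the line from the source
    float exitDistance;   // cm along the line from the source
    Material material;
};

// Sums thickness * absorption over every crossing, capped at maxDb.
float thicknessAttenuationDb(const std::vector<WallCrossing>& crossings, float maxDb = 40.0f) {
    float total = 0.0f;
    for (const WallCrossing& c : crossings) {
        assert(c.exitDistance >= c.entryDistance);
        total += (c.exitDistance - c.entryDistance) * dbPerCm(c.material);
    }
    return std::min(total, maxDb);
}
```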

Furthermore, I would also want to integrate this system inside an actor component, rather than a function alone. Successfully doing so would make it viable for a marketplace release, a much-welcomed source of income and recognition.

Lastly, I would make better use of the university recording studio's resources should I ever attempt to record in the future. Following the anguish I experienced trying to mix a sample recorded in a bedroom, I have definitely learned a lesson in the value of proper acoustics.

UE5 & WWISE [7] - CALLBACK MASKS & BITMASKS:

While exploring some Wwise AK events, I noticed that Unreal supported Callback Masks. Essentially, I could trigger an event with a delegate and do different things depending on the callback type. As I had never explored bitmasks before, I saw this as a great opportunity to further my gameplay and audio programming knowledge. Fortunately, I discovered that bitmasks tie in closely with enumerations (a topic I was already familiar with), so I could pick this subject up relatively quickly.

I began my research by reading through the Unreal Engine documentation to get a basic understanding. Then, I moved on to watching this video, which covered some methods I would later adopt into my model. I was curious whether Audiokinetic had covered anything not present in the Unreal documentation, so I read through their documentation as well and did some further reading on C++ implementation over on the community wiki. Following this research, I decided to use an enumeration switch after the event delegate to drive the actions that were taking place.
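The mask-plus-switch pattern can be sketched in standard C++ with a scoped enum used as bit flags. The flag names and values here are hypothetical and deliberately not the real `AkCallbackType` constants; the action strings stand in for whatever gameplay logic the delegate would run.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical callback flags illustrating the idea of a callback mask
// (these are NOT the real AkCallbackType values).
enum class AudioCallback : std::uint32_t {
    None       = 0,
    EndOfEvent = 1u << 0,
    Marker     = 1u << 1,
    Duration   = 1u << 2,
};

constexpr AudioCallback operator|(AudioCallback a, AudioCallback b) {
    return static_cast<AudioCallback>(static_cast<std::uint32_t>(a) | static_cast<std::uint32_t>(b));
}

// True if every bit of `flag` is set in `mask`.
constexpr bool hasFlag(AudioCallback mask, AudioCallback flag) {
    return (static_cast<std::uint32_t>(mask) & static_cast<std::uint32_t>(flag)) ==
           static_cast<std::uint32_t>(flag);
}

// The mask decides which callbacks we subscribe to; a switch on the single
// delivered type then picks the action (placeholder strings here).
std::string handleCallback(AudioCallback subscribed, AudioCallback delivered) {
    if (!hasFlag(subscribed, delivered)) return "ignored";
    switch (delivered) {
        case AudioCallback::EndOfEvent: return "open door";
        case AudioCallback::Marker:     return "spawn particles";
        case AudioCallback::Duration:   return "cache length";
        default:                        return "ignored";
    }
}
```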

Despite successfully producing a working model that performed actions when a sound ended, I would have loved to explore playback markers, which can be set up inside Adobe Audition. These would allow events to happen at certain points in the sound, facilitating gameplay comparable to that of Hi-Fi RUSH, BPM: Bullets per Minute, or any other rhythm-based game. Syncing events to a beat manually would obviously be incredibly inefficient and tedious, so a system would likely need to be set up to automate that, e.g., the free audio analysis tool on the marketplace.
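As a starting point for beat-synced events, the hypothetical helper below reports which beats of a constant-tempo track were crossed between two playback times, so gameplay can react once per beat regardless of frame rate. It assumes a fixed BPM and a known offset to the first beat; tracks with tempo changes would need markers or analysis data instead.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Returns the indices of beats whose timestamps fall in (prevTime, currTime],
// so each beat is reported exactly once even with uneven frame times.
// Assumes a constant tempo and a first beat at `offsetSeconds`.
std::vector<long> beatsCrossed(double prevTime, double currTime, double bpm,
                               double offsetSeconds = 0.0) {
    assert(bpm > 0.0);
    const double beatLength = 60.0 / bpm;
    std::vector<long> beats;
    // Beat k happens at offset + k * beatLength; start at the first k after prevTime.
    long k = static_cast<long>(std::floor((prevTime - offsetSeconds) / beatLength)) + 1;
    if (k < 0) k = 0;
    for (; offsetSeconds + k * beatLength <= currTime; ++k) beats.push_back(k);
    return beats;
}
```

Calling this each frame with the previous and current playback positions gives a simple alternative to authored markers for steady-tempo music.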

UE5 & WWISE [8] - C++ INTEGRATION (BASIC POST EVENT):

After browsing numerous job postings, the prevalence of C++ skills made clear the importance of at least a base level of understanding. Despite having explored Python and C# in my previous years of education, I had not taken the time to dive too deep into C++, mainly due to the benefits of rapid iteration using visual scripting and how the university course was structured. For someone in my position, this YouTube series provided more than enough information, and I began to put together a basic demonstration using what I had learned.

UE5 & WWISE - BLENDING BETWEEN DIEGETIC & NON-DIEGETIC:

After browsing some audio-related work on Twitter, I stumbled upon this tweet that demonstrated a diegetic blend in Forza Horizon 5. Since there were no tutorials on the subject, besides FMOD speculation in the tweet comments, I undertook this task using the knowledge I had gained so far. I found that my first attempt made the effect too gradual to notice, so part 2 not only reduced some of the threshold values but also used some effects to distinguish between the two tracks.
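A blend of this kind can be sketched as an equal-power crossfade driven by a gameplay value between two thresholds. The driver and thresholds are assumptions (nothing here reflects how Forza Horizon 5 actually does it); narrowing the threshold window is one way to make the transition easier to notice.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct CrossfadeGains { float diegetic; float nonDiegetic; };

// Equal-power crossfade between a diegetic version of a track (e.g. filtered,
// playing "inside" the world) and its non-diegetic version. `driver` is any
// gameplay value (hypothetically speed or camera distance): below `lowThreshold`
// only the diegetic version plays, above `highThreshold` only the non-diegetic one.
CrossfadeGains blendDiegetic(float driver, float lowThreshold, float highThreshold) {
    assert(highThreshold > lowThreshold);
    const float t = std::clamp((driver - lowThreshold) / (highThreshold - lowThreshold), 0.0f, 1.0f);
    const float halfPi = 1.57079632679f;
    // cos^2 + sin^2 = 1, so the summed power stays constant through the blend.
    return {std::cos(t * halfPi), std::sin(t * halfPi)};
}
```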

MISC. DOCUMENTATION

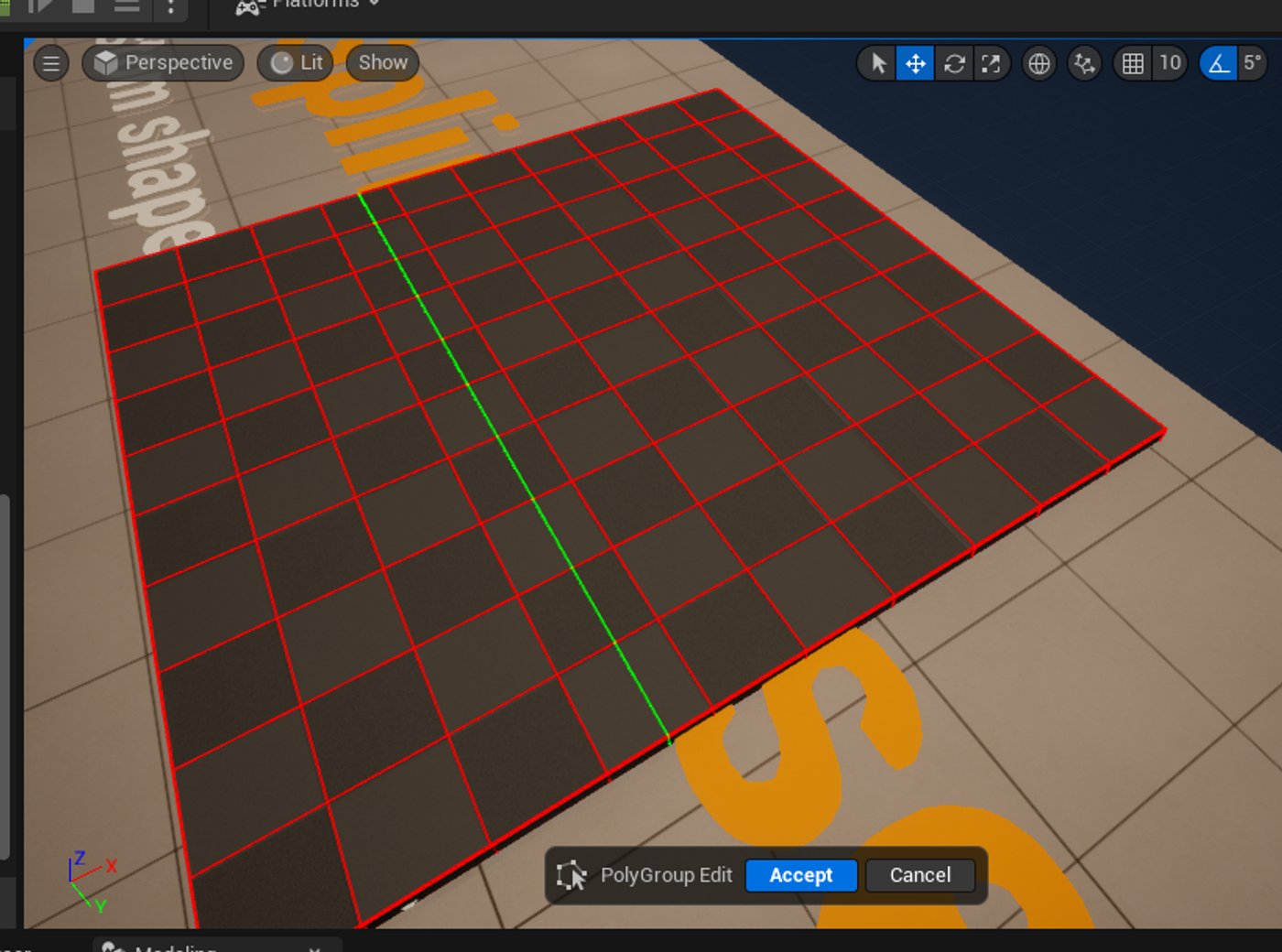

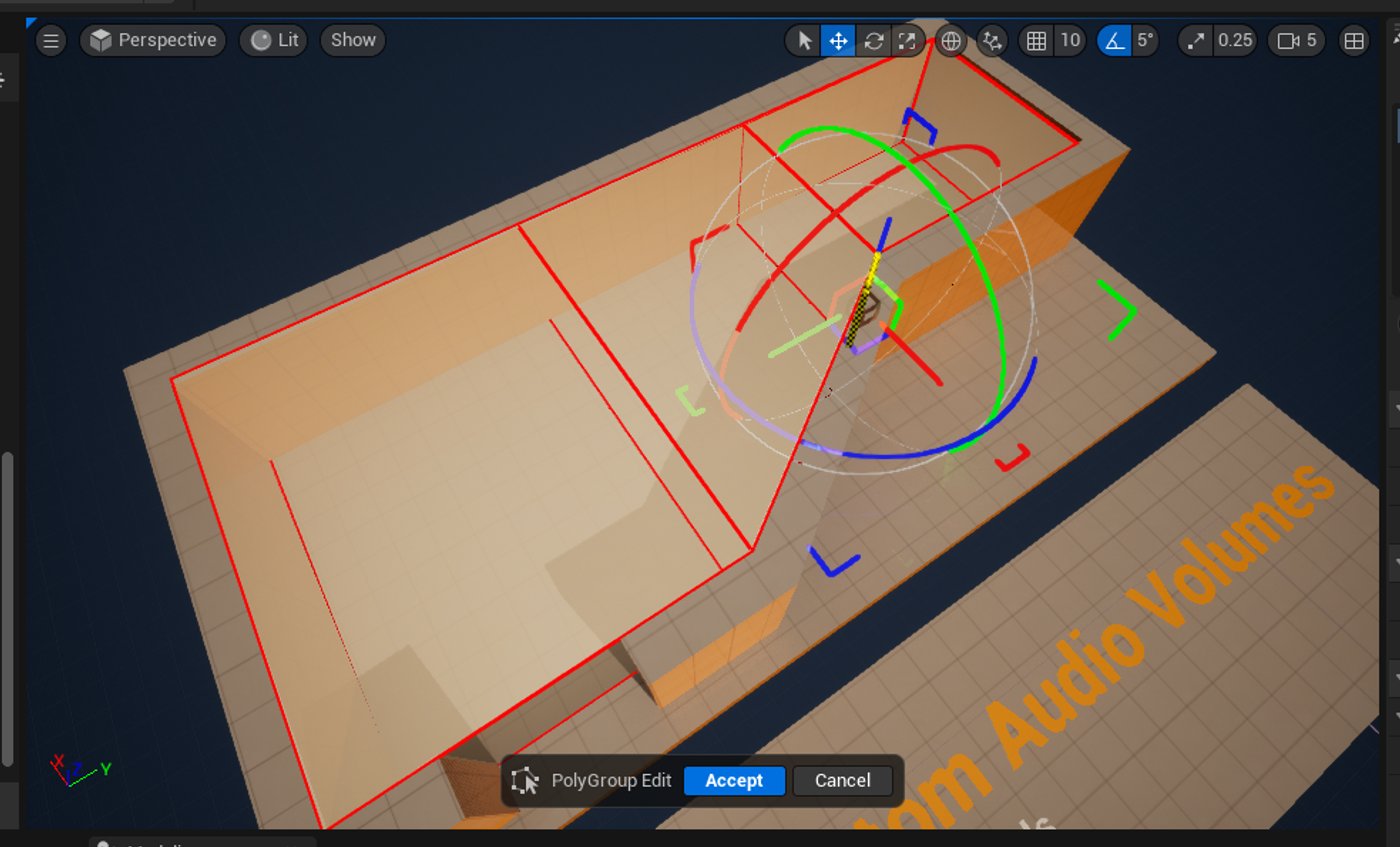

MODELLING TOOLS:

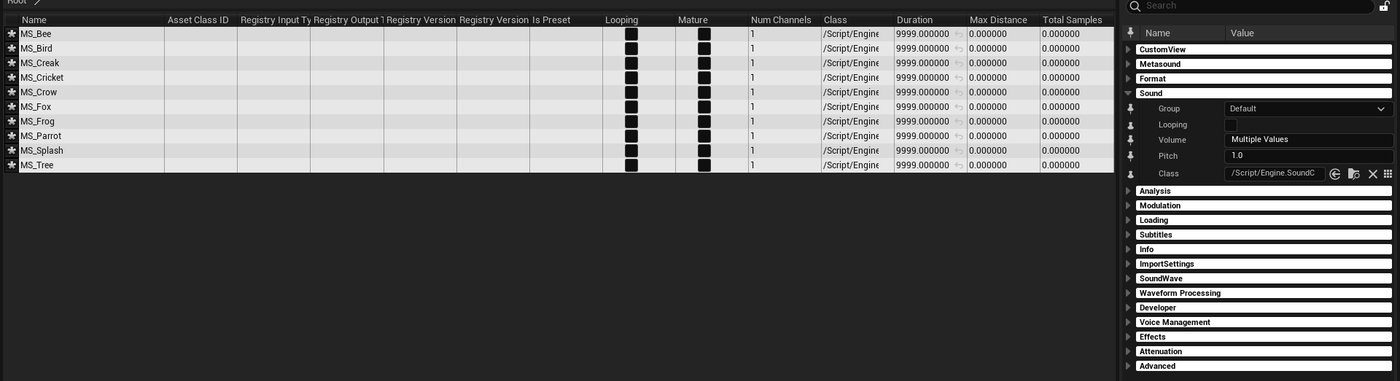

• Subdividing a cuboid in preparation for a spline-based audio presentation that required a bending river.

• Manipulating faces, edges, and vertices on an audio gameplay volume.

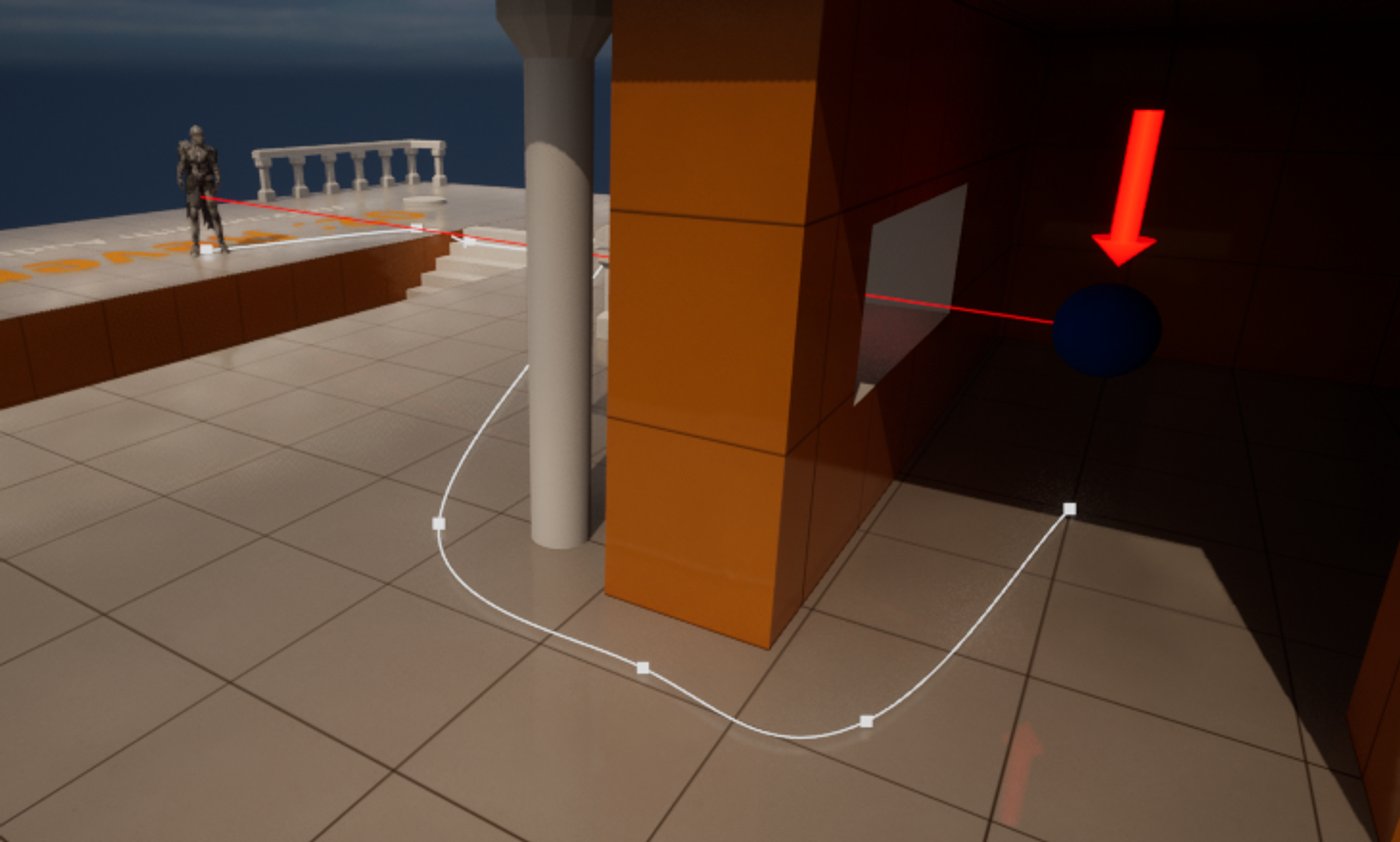

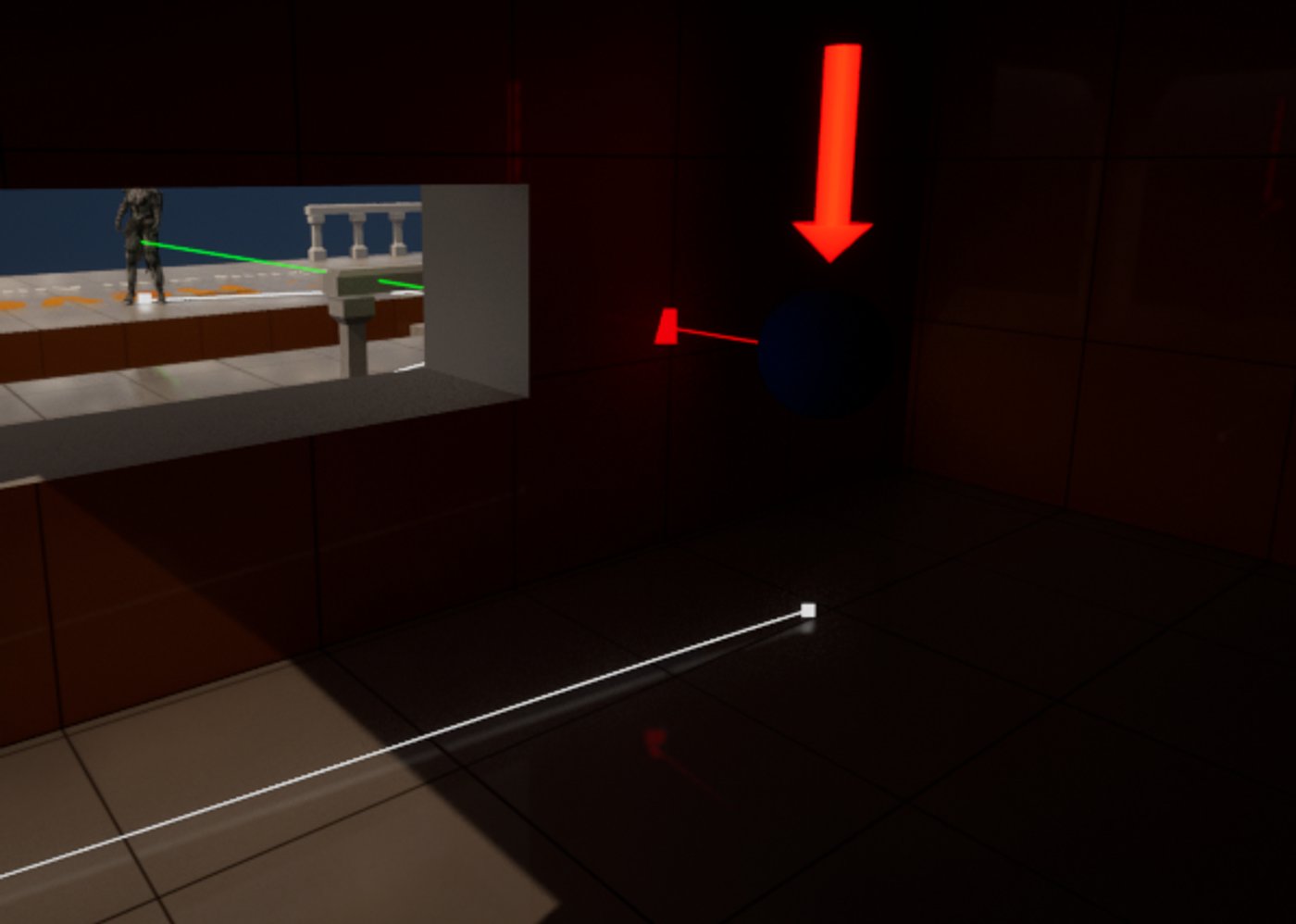

OCCLUSION SYSTEM PREPARATION:

• Using splines and line trace visualization to debug and test the early stages of my custom audio occlusion system.

UE5 & 5.1 RETARGETING:

• Using UE5 bone chaining to retarget animations onto a new mesh.

• Using the improved UE5.1 retargeting tools to duplicate and retarget animation assets.

OTHER ENGINE TOOLS:

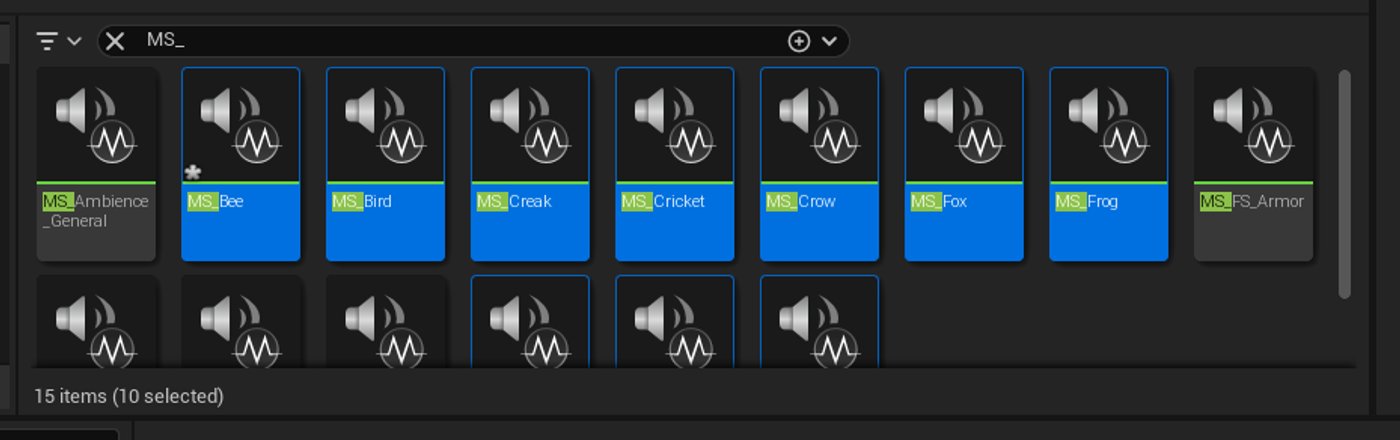

• Bulk editing sounds via property matrix in order to efficiently adjust attributes and apply sound classes.

• Incorporating a cinematic camera sequence in line with the game's audio.

• Experimenting with physics simulation as a technique for corpse posing. See the process demonstrated in this tweet of mine.

• Using unlit mode as a tool to increase visibility and performance while set-dressing and level designing.

FEATURED PLAYABLE PROJECTS I DEVELOPED:

Note: These projects were completed before any Audio Programming Research & Development, and demonstrated an emerging interest in the field.

• ZEN SHARK [PLAY HERE] was a collaborative game made in 3 days from the collective interests of each group member. Gameplay involved the exploration of the East Asian coast, ocean cleanup, and feline rescue. Notable features include a fully procedural and endless world, as documented here.

• NUA.EXE [PLAY HERE] is a clicker game collaboratively made in 2 weeks for the NUA game jam. This project adopts stylized visuals, an interactive environment, and a retentive gameplay loop that satirically encapsulates our time at Norwich University of the Arts. Notable features include a custom-made, easily scalable script to accommodate scientific notation & translation (handling and presenting very, very big numbers).

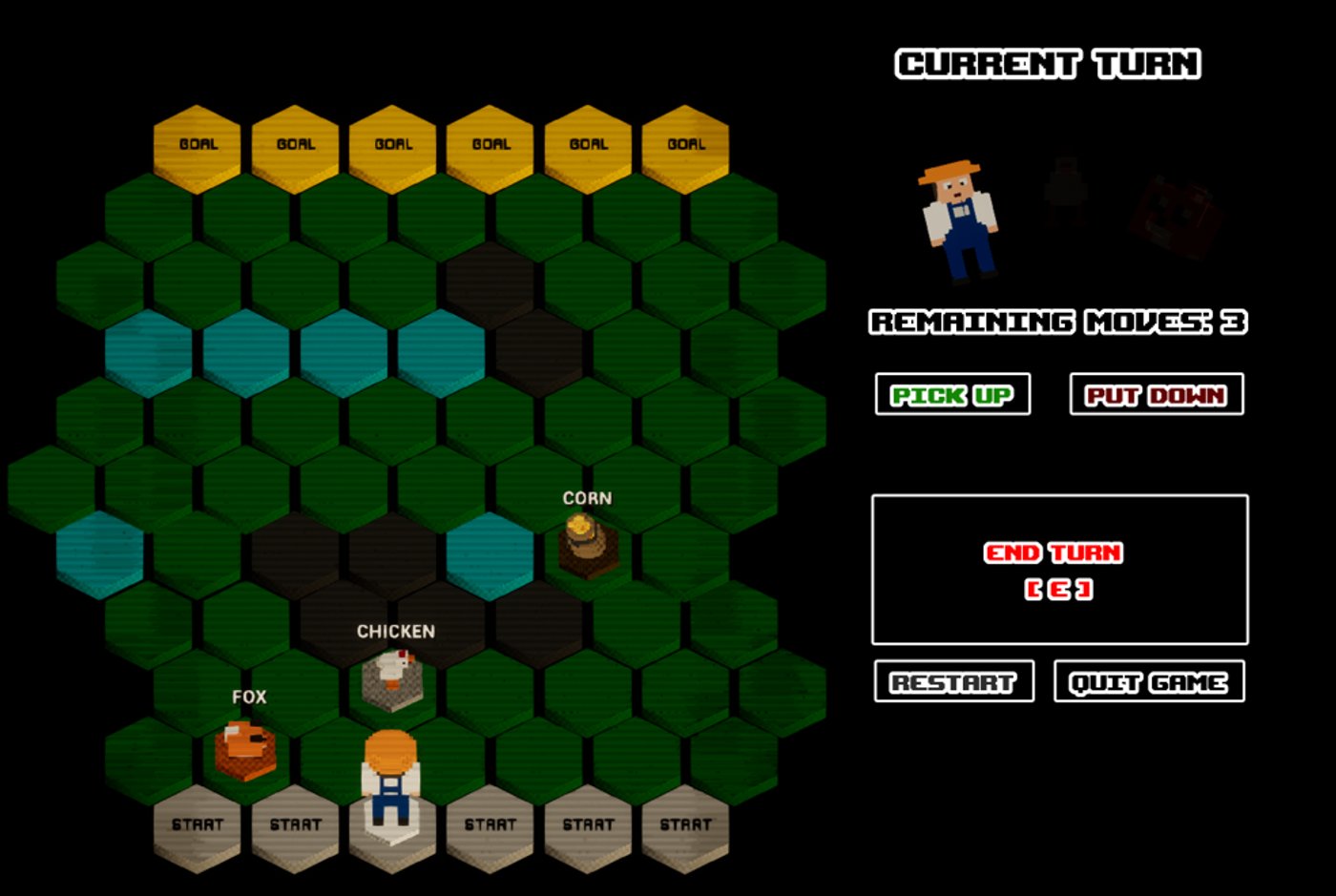

• Hex-grid: Corn, Fox, Chicken puzzle [PLAY HERE] is an independently developed hex-grid adaptation of the fox/chicken/corn puzzle, made in 3 days as a technical demonstration. Notable features include a flood-fill pathfinding algorithm, adjusted to accommodate a hexagonal grid. Find further documentation of the project here, including a video demonstration of the pathfinding algorithm (slowed), as well as a programming retrospective.
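For readers curious about the hex-grid pathfinding, a breadth-first flood fill over axial hex coordinates looks roughly like this. It is a generic sketch, not the project's code; the axial coordinate scheme, the hexagonal board shape, and the function names are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdlib>
#include <map>
#include <queue>
#include <set>
#include <utility>

using Hex = std::pair<int, int>;  // axial coordinates (q, r)

// The six neighbour offsets of a hex in axial coordinates.
const Hex kHexDirections[6] = {{1, 0}, {1, -1}, {0, -1}, {-1, 0}, {-1, 1}, {0, 1}};

// Hex distance from the origin in axial coordinates: max(|q|, |r|, |q + r|).
int hexRadius(Hex h) {
    return std::max({std::abs(h.first), std::abs(h.second), std::abs(h.first + h.second)});
}

// Breadth-first flood fill from `start` over a hexagonal board of the given
// radius, skipping blocked cells. Returns the step count to every reachable cell.
std::map<Hex, int> floodFill(Hex start, int boardRadius, const std::set<Hex>& blocked) {
    std::map<Hex, int> dist;
    if (hexRadius(start) > boardRadius || blocked.count(start)) return dist;
    std::queue<Hex> frontier;
    dist[start] = 0;
    frontier.push(start);
    while (!frontier.empty()) {
        const Hex cur = frontier.front();
        frontier.pop();
        for (const Hex& d : kHexDirections) {
            const Hex next{cur.first + d.first, cur.second + d.second};
            if (hexRadius(next) > boardRadius || blocked.count(next) || dist.count(next)) continue;
            dist[next] = dist[cur] + 1;
            frontier.push(next);
        }
    }
    return dist;
}
```

The only hex-specific parts are the six neighbour offsets and the board bounds check; the breadth-first structure is the same as on a square grid.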

OUTRO

PLANS FOR THE FUTURE:

As I graduate from University, I am currently in the rather awkward position of having no industry experience. This is complicating the job-seeking process, as interviews are regularly rescinded, and applications are either rejected or ignored. This is part of the reason I submitted my work to The Rookies - to get my work out there!

In the meantime, I continue to build my portfolio further by addressing some of my weaknesses. I look forward to seeing what everyone else submits!

SUMMARY:

I understand that there is a lot of information to take in from this post, and that's exactly how I feel too. This year has been a very eventful and challenging time as I stepped out of my comfort zone and learnt skills and processes from different disciplines. A huge thanks to all the University staff who supported me on this journey despite how niche and underrepresented the topic of Audio Programming is.

I am more than happy to answer any questions or provide some guidance for anyone looking to step up the audio in their projects, so feel free to contact me on my socials. If you found my work interesting, don't hesitate to leave a comment here and let me know what part you enjoyed learning about the most!
